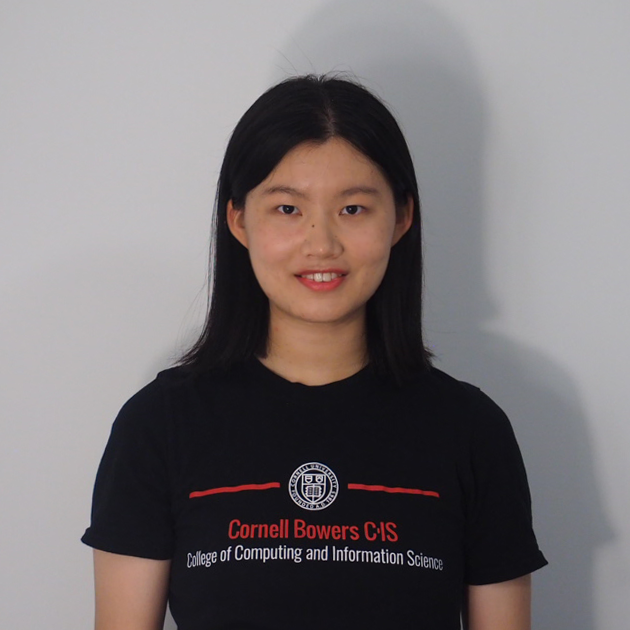

I am a second-year CS Ph.D. student at Princeton University working with Professors Adam Finkelstein and Felix Heide. My research spans graphics, vision, and HCI, with a focus on AI for content creation and computational photography. I am interested in exploring methods that combine mathematical models of both image processing problems and user experience to tackle new applications.

Previously, I completed my undergraduate studies at Cornell University, majoring in Computer Science and minoring in Psychology. I was fortunate to be advised by Professor Abe Davis and spent two wonderful years with the Cornell Vision & Graphics Group, where I became good friends with the Lab Cat.